As a business owner, what do you fear about uncontrolled AI usage?

Is it employees uploading sensitive data to unauthorized tools? Departments creating AI policies in silos?

Many businesses struggle with scattered AI adoption that creates security risks, duplicates effort, and misses opportunities. The right AI governance frameworks can turn that chaos into coordinated progress.

When Shadow AI Threatens Everything You've Built

The whispered conversations in break rooms reveal what management fears most: your teams are already using AI without telling you. They've discovered how much time it saves, but they're keeping quiet because they're afraid you'll take away a tool that makes their job easier.

At the University of Rochester, Brian Piper uncovered this reality while implementing the university's AI Council. "People were hiding the fact that they were using AI," he explains. "They didn't want their boss to know they were getting work done in half the time."

This shadow AI creates a dangerous undertow in your organization. Every time an employee uses an unauthorized AI tool, they risk exposing sensitive data. Your finance team might be pasting spreadsheets into ChatGPT without realizing that, depending on the account's settings, their quarterly projections could be used to train the very models your competitors use. Your product team might upload proprietary designs, potentially giving up control of intellectual property that took years to develop.

The Hidden Cost of Unmanaged AI

The risks extend well beyond data exposure. Without clear oversight, departments often adopt conflicting AI practices. Marketing may approve tools that IT restricts, while Sales experiments with methods Legal hasn’t vetted. This results in a patchwork of unaligned policies that breed confusion, frustration, and ultimately, serious compliance issues.

Even more insidious: the knowledge silos. Every AI success stays hidden instead of benefiting your entire organization. When your social media manager discovers a brilliant AI prompt sequence that triples engagement, that innovation remains locked in their personal account instead of becoming a company-wide asset.

Turning Shadow AI into Strategic Advantage

You face two choices: ignore this shadow AI and accept the risks, or turn hidden usage into open collaboration.

The solution isn't banning AI tools. Your employees have already discovered AI's power to compress time and enhance their work. They won't give that up. Instead, establish support structures that promote open dialogue around AI use and set clear boundaries for data protection.

Set up an AI Council that encourages transparency instead of punishing efficiency. "Now people are willing to share what they're doing with AI," notes Piper. This shift both reduces security risks and accelerates organization-wide innovation.

Taking this first step turns a potential threat into a strategic advantage. Instead of fearing shadow AI, you’ll tap into the initiative it reflects while ensuring the protections your business requires. John Munsell explains this in depth in his book INGRAIN AI™ - Strategy Through Execution: The blueprint to scale an AI-first culture.

Building an AI Council

The difference between haphazard AI adoption and strategic change boils down to a single question: who's in the driver's seat? Without central coordination, departments drift in different directions, creating pockets of innovation that never scale.

The AI Council is a strategic command center that accelerates adoption while maintaining security. When properly structured, it shifts how your entire organization thinks about and implements AI.

Building the Perfect Council

The composition of your AI Council matters more than its authority. You need a specific chemistry to drive meaningful change:

"The people that I thought were going to be the best advocates just don't have time," explains C.A. Clark, VP of Artificial Intelligence at Miles Partnership. "But the people with the least tenure were most aggressive about doing things and working with other people."

This insight led Clark to a smart approach: divide responsibilities into two focused groups. One steers high-level decisions and strategic direction. The other, composed of energetic, emerging leaders, focuses on hands-on execution across the organization.

The pAEi Balance

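The ideal AI Council functions with what management expert Ichak Adizes calls a "pAEi" makeup, where capital letters mark the roles that should be strong and lowercase letters mark those that only need to be adequate. It is a balanced blend of: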

- Sufficient Producers (p) who maintain momentum

- Strong Administrators (A) who build scalable structures and safeguards

- Powerful Entrepreneurs (E) who spot transformative opportunities

- Adequate Integrators (i) who help diverse personalities collaborate

This well-rounded mix solves three critical challenges at once:

- It prevents overregulation. Too many Administrators create rules that stifle innovation.

- It avoids chaos. Too many Entrepreneurs generate ideas without implementation structure.

- It maintains progress. The right mix ensures both protection and advancement.

From Guardians to Accelerators

Your AI Council's first mission isn't crafting lengthy policy documents. It's identifying quick wins that demonstrate AI's value while establishing essential protections.

Start by cataloging what's already happening. Which departments use which AI tools? What security measures exist? Where are the biggest vulnerabilities? This baseline assessment reveals your most urgent priorities.

Next, establish guidelines, not rigid policies. "The reason we went with guidelines is because policies have to be approved by a panel of people, and they're much more difficult to change," explains Brian Piper. "We know that AI changes very quickly."

This insight shapes everything. AI moves faster than traditional governance can adapt. By the time a rigid policy gets approved, the technology may already have moved on. Instead, successful organizations build frameworks flexible enough to evolve alongside AI while maintaining necessary protections.

From Rigid Rules to Enabling Frameworks

Many organizations mishandle AI governance. They produce massive rulebooks no one reads and build systems so complicated that teams either ignore them or get tangled in red tape, abandoning AI innovation altogether.

But good oversight doesn’t constrain; it empowers. Clear, flexible boundaries give teams the confidence to innovate safely and accelerate adoption without hesitation.

The Three Critical Frameworks

Building effective AI governance means balancing three essential frameworks:

- Data Privacy: This is about compliance and trust. Set clear guidelines for what data can be used with which AI tools. Samsung's 2023 incident, in which employees pasted proprietary source code into ChatGPT, shows what happens without these boundaries. Create simple, clear categories for data (public, internal, confidential, restricted) and specify which AI tools can access each level.

- Intellectual Property: Protect your company's creative assets while using AI's generative capabilities. Define who owns AI-generated content, establish processes for reviewing AI outputs before publication, and create explicit guidelines for how proprietary information interacts with AI systems.

- Ethical Use: This often-overlooked element might be your most important protection. Define not just what's legally permissible but what aligns with your values. Establish checks against bias, ensure transparency in AI-assisted decisions, and create clear accountability chains for AI implementations.
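To make the four data categories enforceable rather than aspirational, the rule "which AI tools can access each level" can be written down as a small lookup. The sketch below is illustrative only: the tool names and their clearance levels are hypothetical placeholders, and a real setup would load them from whatever policy list your AI Council maintains.

```python
from enum import IntEnum


class DataTier(IntEnum):
    """The four data categories, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical tools and clearances: each tool maps to the most sensitive
# tier it is approved to receive. Replace with your council's decisions.
TOOL_CLEARANCE: dict[str, DataTier] = {
    "public-chatbot": DataTier.PUBLIC,
    "enterprise-assistant": DataTier.CONFIDENTIAL,
    "self-hosted-model": DataTier.RESTRICTED,
}


def is_allowed(tool: str, tier: DataTier) -> bool:
    """Return True if `tool` is cleared for data at `tier`.

    Tools missing from TOOL_CLEARANCE are denied by default, so a new
    tool receives no company data until someone has reviewed it.
    """
    clearance = TOOL_CLEARANCE.get(tool)
    if clearance is None:
        return False
    return tier <= clearance


if __name__ == "__main__":
    print(is_allowed("public-chatbot", DataTier.INTERNAL))       # False
    print(is_allowed("enterprise-assistant", DataTier.INTERNAL))  # True
    print(is_allowed("unvetted-plugin", DataTier.PUBLIC))         # False
```

Denying unlisted tools by default is the design choice worth copying: it turns shadow AI from a silent risk into a request the council can approve.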

Building Guardrails That Accelerate Adoption

When teams know the boundaries, they innovate confidently within them without fear of crossing invisible lines.

Your governance frameworks should answer these questions clearly:

- Which AI tools are approved for which purposes?

- What data can be used with each tool?

- Who needs to review AI-generated content before external use?

- How do we document AI's role in decision-making?

- What ethical standards must all AI implementations meet?
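Several of these questions (approved tools per purpose, review before external use, documenting AI's role) can be turned into a checklist that runs whenever someone logs an AI-assisted deliverable. This is one hypothetical way to do it: the record fields, the tool name, and the approved purposes are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical approvals: which purposes each tool is cleared for.
APPROVED_USES: dict[str, set[str]] = {
    "enterprise-assistant": {"drafting", "summarizing", "research"},
}


@dataclass
class AIUsageRecord:
    """A hypothetical log entry documenting how AI contributed to a deliverable."""
    tool: str
    purpose: str
    ai_role: str                    # e.g. "drafted first version", "summarized sources"
    external: bool                  # will the output leave the company?
    reviewer: Optional[str] = None  # person who signed off before external use


def policy_violations(record: AIUsageRecord) -> list[str]:
    """Return the governance checks this record fails (empty list if none)."""
    problems = []
    if record.purpose not in APPROVED_USES.get(record.tool, set()):
        problems.append(f"{record.tool!r} is not approved for {record.purpose!r}")
    if not record.ai_role.strip():
        problems.append("AI's role in the work is not documented")
    if record.external and not record.reviewer:
        problems.append("external content needs a named reviewer before release")
    return problems


if __name__ == "__main__":
    draft = AIUsageRecord("enterprise-assistant", "drafting",
                          "drafted first version", external=True)
    print(policy_violations(draft))
    # ['external content needs a named reviewer before release']
```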

When these questions have clear answers, your teams move faster rather than slower. They spend less time wondering "can we do this?" and more time asking "how can we do this better?"

The Canvas That Makes Implementation Possible

Nothing stalls AI adoption faster than abstract conversations that never lead to real outcomes. Your teams need more than broad principles: they need a clear, actionable approach that turns big-picture strategy into concrete steps.

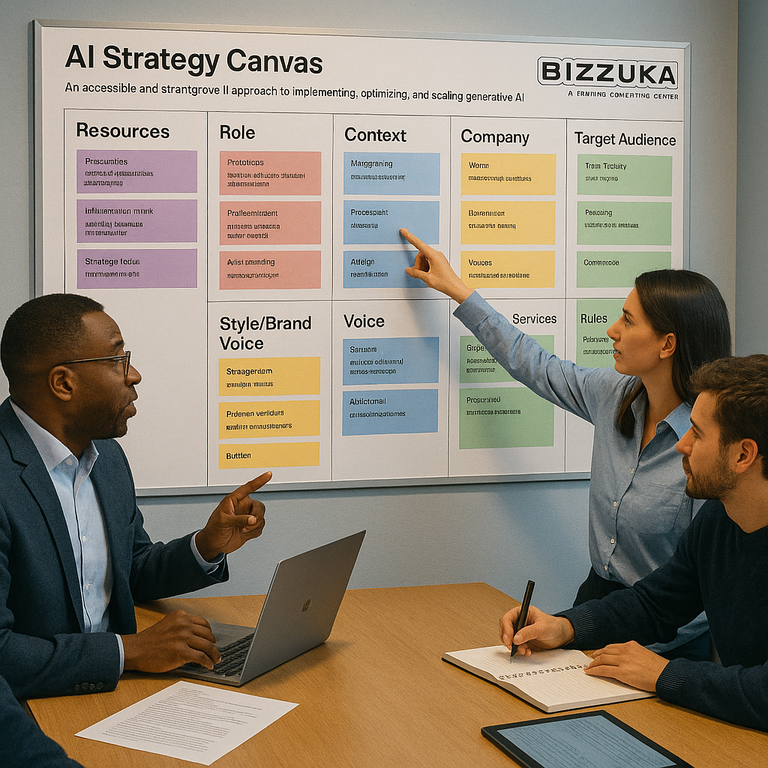

This is where the AI Strategy Canvas® will change everything about your governance approach.

The Nine Building Blocks That Change Everything

The AI Strategy Canvas is a structured tool designed to ensure every AI initiative addresses nine critical elements:

- Target Audience: Who specifically benefits from this AI initiative?

- Company: What organizational context does the AI need to understand?

- Products/Services: Which offerings does this AI initiative support?

- Context: What market conditions or situations affect this initiative?

- Role: What specific function will AI serve in this context?

- Style/Brand Voice: How should the AI communicate to maintain brand consistency?

- Resources: What data, tools, and capabilities does the initiative require?

- Rules: What compliance, ethical, and operational guidelines apply?

- Request: What precise outcomes should the AI deliver?

This structured approach forces your teams to consider every factor that affects AI success. No more scattered initiatives or uncoordinated efforts. Every department uses the same framework to plan, implement, and evaluate AI projects.
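One way to put the nine blocks to work is to capture each initiative as a structured record and refuse to produce a prompt until every block, including Rules, is filled in. This is a minimal sketch under our own assumptions: the `CanvasEntry` class, its field names, the labeled-line prompt format, and the sample values are all hypothetical, not the official AI Strategy Canvas template.

```python
from dataclasses import dataclass, field, fields


def block(label: str):
    """Declare a required canvas block carrying a human-readable label."""
    return field(metadata={"label": label})


@dataclass
class CanvasEntry:
    """One AI initiative described by the nine canvas blocks (illustrative)."""
    target_audience: str = block("Target Audience")
    company: str = block("Company")
    products_services: str = block("Products/Services")
    context: str = block("Context")
    role: str = block("Role")
    style_brand_voice: str = block("Style/Brand Voice")
    resources: str = block("Resources")
    rules: str = block("Rules")
    request: str = block("Request")

    def missing_blocks(self) -> list[str]:
        """Names of blocks left blank; a reviewed initiative should have none."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def to_prompt(self) -> str:
        """Render the blocks as labeled lines, refusing an incomplete canvas."""
        missing = self.missing_blocks()
        if missing:
            raise ValueError("Canvas incomplete; fill in: " + ", ".join(missing))
        return "\n".join(
            f"{f.metadata['label']}: {getattr(self, f.name)}" for f in fields(self)
        )


if __name__ == "__main__":
    # Hypothetical sample values for illustration only.
    entry = CanvasEntry(
        target_audience="Operations managers at mid-sized firms",
        company="Example Co., a B2B software vendor",
        products_services="Project scheduling software",
        context="Prospects are comparing us with larger competitors",
        role="Product marketing copywriter",
        style_brand_voice="Plain, confident, no jargon",
        resources="Public product documentation only",
        rules="No customer data; no unverified performance claims",
        request="Draft a 150-word product overview for the website",
    )
    print(entry.to_prompt())
```

Making Rules a required field is the point of the exercise: an initiative cannot reach the prompt stage until its compliance and ethical constraints are written down.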

From Chaos to Coordination

When marketing sits down with IT to develop an AI initiative, the canvas structures their conversation. Instead of speaking different languages, they address each building block methodically. Marketing articulates the audience's needs. IT clarifies resource requirements. Together, they establish appropriate rules.

"The moment I used the Strategy Canvas to start writing a prompt, everything started to fall into place," explains John Thompson, COO of Exepron, a project management software company. This structured approach turned his team's scattered AI efforts into coordinated progress.

Similarly, Tracy L. Norton, a law professor who teaches prompt engineering to other law professors, found that the canvas changed her entire approach to AI: "The approach made sense to me right away. As soon as I heard it, I thought, 'ah, that's it! That's what I've been looking for!' It was revelatory."

The canvas solves your governance challenges in three crucial ways:

First, it creates natural alignment between departments. When everyone uses the same framework, cross-functional collaboration becomes automatic rather than forced.

Second, it integrates oversight directly into implementation. The Rules block ensures every AI initiative incorporates proper controls from the start, not as an afterthought.

Third, it accelerates adoption by simplifying complexity. Teams spend less time wondering what to consider and more time creating value with AI.

Ready to change how your organization approaches AI? The AI Strategy Canvas offers a structured framework to align strategy with execution, bridging the gap between ambition and accountability. It equips cross-functional teams to navigate every critical aspect of AI initiatives, ensuring innovation and protection are built in from the start.

Download your free copy of the AI Strategy Canvas here and start building AI governance that works. Or grab a copy of INGRAIN AI to learn how to create and scale an AI-first culture across your entire operation.