Reshaping the landscape of cybercrime

Imagine this: You're sitting at your desk, sipping your morning coffee, when you receive an urgent email from your CEO. The email contains a video message, and it's the CEO's voice, no doubt about it. They're requesting an immediate transfer of funds to a new vendor for a critical project.

The request seems a bit out of the ordinary, but it's the CEO's voice, and the project sounds important.

So, you proceed with the transaction. Only later do you discover that the CEO never sent that message.

You've been duped by a deepfake.

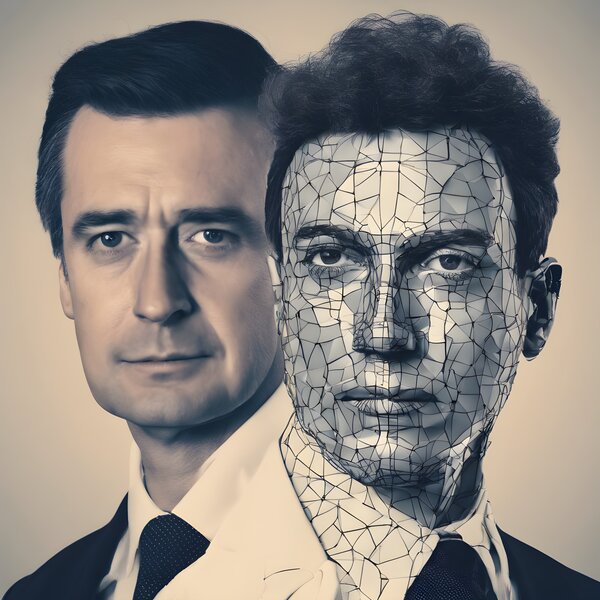

Deepfakes, a term coined from "deep learning" and "fake", are artificial intelligence (AI)-generated images, videos, voices, or texts that are so convincingly real, they can easily fool the human eye and ear.

They're not just a novelty or a new tool for creating amusing Internet memes. They're a growing cybersecurity threat that can cause serious harm to individuals, businesses, and society at large.

In the realm of cybersecurity, deepfakes are the new kid on the block, and they're quickly becoming a significant threat. They're being used to impersonate executives, spread disinformation, manipulate public opinion, and even influence election outcomes.

The quality of deepfakes has improved so much that even trained eyes and ears may fail to identify them.

The rise of deepfakes presents a unique challenge for businesses. They can disrupt business operations, facilitate fraud, and damage reputations.

Even if a company can prove it was the victim of a deepfake, the damage to its reputation and potential loss of revenues could already be done.

While the threat is real, understanding the nature of deepfakes and how they work is the first step towards protecting yourself and your organization. How much do you really know about the deepfake phenomenon and its potential impact on your organization?

What exactly is a deepfake?

Have you ever considered the possibility that the person you're watching or listening to isn't real?

What would you do if you couldn't trust your own eyes and ears anymore?

Deepfakes are not just a theoretical threat; they're causing real-world damage at a rapidly growing rate.

Take, for instance, the incident in Hong Kong, reported in early 2024, where fraudsters faked an entire video conference call. The scam used deepfake video of the company's chief financial officer and other colleagues to request the transfer of funds to multiple accounts.

The video was so convincing that the finance employee on the call didn't suspect a thing until it was too late.

That employee was tricked into transferring about $25 million to accounts controlled by the fraudsters.

This incident is not an isolated case. Deepfakes are becoming a common tool in the arsenal of cybercriminals. They're being used to impersonate executives, trick employees into revealing sensitive information, and even to manipulate stock prices.

And the scary part is, the technology behind deepfakes is becoming more accessible and easier to use.

Today, anyone with a decent computer and some technical know-how can create a convincing deepfake.

But why are deepfakes so effective?

The answer lies in their ability to exploit one of our most basic human instincts: trust. We tend to trust what we see and hear. When we see a video or hear a voice, our brain automatically assumes it's real. Deepfakes take advantage of this instinct, creating illusions that are almost indistinguishable from reality.

Tools like HeyGen, ElevenLabs, and Speechify are popular with marketers because they can clone a voice or video likeness in seconds. The positive use cases are numerous, but they also open the door for bad actors to clone anyone they like saying anything they like. The difference between real and cloned can be hard to tell.

We’ve only just scratched the surface with this technology, so you can be sure that fakes will only become harder to distinguish from authentic versions.

Consider the implications for your organization. How would your people discern truth from deception in a world where technology blurs the lines?

What about the trust you place in your digital communications every day—how easily could it be exploited by a well-crafted deepfake?

Think it can’t happen to you?

If you think your business is immune to deepfakes, you're living in a fantasy.

The threat of deepfakes doesn't always come from the outside; it can masquerade as an email or voicemail from within your organization, making it an invisible enemy.

The rise of identity fraud through AI-generated deepfakes is increasingly alarming. A survey by Regula, a leading identity verification solutions and forensic devices provider, recently revealed that 37% of organizations have encountered deepfake voice fraud and 29% have been targeted by deepfake videos.

Imagine receiving a voice message from your trusted colleague, asking for sensitive company data. It sounds exactly like them, but in reality, it's a deepfake engineered by a competitor.

This is not science fiction; it's a scenario that could happen to your business at any time. I should know. It happened to us.

About eight years ago, our bookkeeper received an email from me asking her to quickly wire $19,850 to another business in payment of an invoice that I’d overlooked. She scrambled to get all the necessary information and then wired the money.

The only problem was, that email didn’t come from me. It was a fake before the age of deepfakes.

We were fortunate enough to get our money back about 10 days later, but it was an excruciating 10 days.

To this day, we get about three of these emails every week. They’re even going so far as to spoof my email signatures and use domain names that are very close to ours.
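Look-alike domains of this kind can be screened for automatically. Here is a minimal sketch, assuming a single trusted domain and a similarity threshold of 0.8; both `TRUSTED_DOMAIN` and the threshold are placeholders we picked for illustration, not values from any real mail filter.

```python
from difflib import SequenceMatcher

# Hypothetical trusted domain; replace with your own.
TRUSTED_DOMAIN = "example.com"


def is_lookalike(sender_domain: str,
                 trusted: str = TRUSTED_DOMAIN,
                 threshold: float = 0.8) -> bool:
    """Flag domains that are very similar to, but not the same as, the trusted one.

    The threshold is an assumed starting point and would need tuning.
    """
    sender = sender_domain.strip().lower()
    if sender == trusted:
        return False  # the genuine domain is not a look-alike
    similarity = SequenceMatcher(None, sender, trusted).ratio()
    return similarity >= threshold


if __name__ == "__main__":
    for domain in ["example.com", "examp1e.com", "unrelated.org"]:
        print(domain, is_lookalike(domain))
```

A check like this only catches near-miss spellings. It says nothing about a message that really does come from a compromised account on your own domain, which is why a human verification step still matters.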

Our previous scare was all we needed to develop a protocol to ensure no money goes anywhere without passing multifactor authentication.

In the age of deepfakes, however, the concept of a "conversation handshake," a verification method reminiscent of old spy movies, is not just a clever plot device—it's become our newest tool in the arsenal of business security.

It's a simple yet effective way to confirm the authenticity of a request, adding an extra layer of defense against scammers.

It goes something like this: if someone calls our bookkeeper using what certainly sounds like my voice asking her to process a payment, she responds with a pre-arranged question.

For the sake of this post, let’s just say she responds with "Is this something I need to do right now, or can I run to the bathroom first?"

If the caller is indeed me, my response, based on our pre-arranged script, would be, "Well, are you sure no one's already in there?"

Any other response, and she knows it's a scam.

This strategy keeps scammers at bay and helps me sleep at night.
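For teams that want to log or rehearse the handshake, the logic is easy to write down. The sketch below is only an illustration: the `HANDSHAKES` table, `normalize`, and `verify_reply` are names we made up, and the phrases are the example ones from this post rather than anything you should actually use.

```python
# Hypothetical table of pre-arranged handshakes: challenge -> expected reply.
# These are the illustrative phrases from this post, not real secrets.
HANDSHAKES = {
    "is this something i need to do right now, or can i run to the bathroom first?":
        "well, are you sure no one's already in there?",
}


def normalize(phrase: str) -> str:
    """Lowercase and collapse whitespace so small differences in delivery are ignored."""
    return " ".join(phrase.lower().split())


def verify_reply(challenge: str, reply: str) -> bool:
    """Return True only if the reply is the pre-arranged answer to the challenge."""
    expected = HANDSHAKES.get(normalize(challenge))
    if expected is None:
        return False  # unknown challenge: treat the request as unverified
    return normalize(reply) == expected


if __name__ == "__main__":
    challenge = "Is this something I need to do right now, or can I run to the bathroom first?"
    print(verify_reply(challenge, "Well, are you sure no one's already in there?"))
    print(verify_reply(challenge, "Yes, please do it right away."))
```

The design choice that matters is the default: anything other than the exact agreed reply fails, so a caller who improvises a plausible answer is still rejected.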

Are your security protocols sufficient to counter not just the threats you can see but also those you can't?

The evolution of deepfake technology challenges you to be ever more creative in your approaches to cybersecurity, ensuring you're prepared for whatever the future holds.

The rush for new legislation

The realization that anyone's likeness can be used without consent is a chilling wake-up call.

The high-profile case involving Taylor Swift, where her likeness was used without her consent in a deepfake, highlights the pressing need for comprehensive legislation.

Currently, there's a significant gap in the law when it comes to non-consensual deepfakes, particularly those of a personal and explicit nature. This legal void affects not only individuals but also businesses and their leaders, who may find themselves the targets of damaging deepfake attacks.

Another notable case is that of the late comedian George Carlin. In early 2024, an hour-long comedy special imitating his voice and style was released and billed as AI-generated, without his estate's permission, raising ethical and legal questions about the use of a person's likeness after their death.

These incidents underscore the complexity of the deepfake issue, which straddles the realms of technology, law, and ethics.

In the United States Congress, a proposed bill aims to hold creators of malicious deepfake content accountable. However, the legislative process can be slow, and until such laws are in place, businesses are left to navigate a murky legal landscape.

This uncertainty adds another layer of complexity to the deepfake dilemma. Companies must not only tackle the technological challenges but also understand and prepare for potential legal ramifications.

It's a multifaceted problem that calls for a multifaceted solution, combining technology, policy, and education.

As we grapple with these challenges, it's crucial to remember that prevention is often better than cure. By staying informed about the legal context and potential implications of deepfakes, businesses can better prepare for and protect against this emerging threat.

This proactive approach is about more than just preventing financial loss; it's about safeguarding the very integrity of our business identities in an increasingly digital world.

Deepfakes call for advanced security measures

Deepfakes threaten to make traditional cybersecurity obsolete within the next decade.

We're entering a new era where seeing is no longer believing, and hearing is no longer proof. In this new reality, businesses need to rethink their security strategies and adopt new measures to protect themselves against the threat of deepfakes.

Passwords, and even two-factor authentication, offer little protection when someone who convincingly mimics a CEO's voice or appearance persuades an employee to act for them.

The solution?

A blend of advanced security measures designed to outsmart the deepfake technology itself.

One security measure turning heads is digital watermarking.

This technology embeds a hidden marker in digital content that can verify its authenticity. It's like a digital signature that's invisible to the naked eye but can be detected by the right tools.

For businesses, embedding digital watermarks in official communications could be revolutionary, making it harder for deepfakes to pass as genuine.
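To make the idea of a hidden marker concrete, here is a toy sketch of the simplest form of watermarking: hiding bits in the least significant bit of pixel values. It assumes an image is just a list of 0-255 integers, and the function names are ours. Commercial watermarking is far more robust than this; a mark like the one below would not survive compression or resizing.

```python
def embed_watermark(pixels: list[int], mark_bits: list[int]) -> list[int]:
    """Hide mark_bits in the least significant bit of the first pixels.

    Each pixel value changes by at most 1, which is invisible to the eye.
    """
    if len(mark_bits) > len(pixels):
        raise ValueError("watermark is longer than the image data")
    marked = list(pixels)  # copy so the original data is left untouched
    for i, bit in enumerate(mark_bits):
        marked[i] = (marked[i] & ~1) | (bit & 1)  # clear the low bit, then set it
    return marked


def extract_watermark(pixels: list[int], length: int) -> list[int]:
    """Read back the low bit of the first `length` pixels."""
    return [p & 1 for p in pixels[:length]]


if __name__ == "__main__":
    image = [200, 13, 54, 255, 90, 121, 7, 66]  # made-up pixel values
    mark = [1, 0, 1, 1]                         # made-up watermark bits
    marked = embed_watermark(image, mark)
    print(extract_watermark(marked, len(mark)) == mark)
```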

Another cutting-edge solution is the use of blockchain technology.

By creating a decentralized ledger of digital assets and communications, blockchain can provide a tamper-evident record of authenticity.

Imagine if every photograph, video, email, or document sent within your company could be verified against a blockchain record. The integrity of your communications would be significantly harder to compromise.
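The core mechanism behind that kind of record is a chain of hashes, where each entry depends on the one before it. The sketch below shows only that mechanism, using Python's standard `hashlib`. It is a single-machine hash chain, not a decentralized blockchain: there is no network, no consensus, and the function names are our own.

```python
import hashlib
import json


def record_hash(content: bytes, previous_hash: str) -> str:
    """Hash a message's digest together with the previous record's hash."""
    content_digest = hashlib.sha256(content).hexdigest()
    payload = json.dumps({"content": content_digest, "prev": previous_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def build_ledger(messages: list[bytes]) -> list[str]:
    """Return one chained hash per message, in order."""
    ledger = []
    prev = "0" * 64  # placeholder hash standing in for "no previous record"
    for message in messages:
        prev = record_hash(message, prev)
        ledger.append(prev)
    return ledger


def verify_ledger(messages: list[bytes], ledger: list[str]) -> bool:
    """Recompute the chain and compare it with the stored ledger."""
    return build_ledger(messages) == ledger


if __name__ == "__main__":
    sent = [b"Invoice 1", b"Pay vendor A", b"Quarterly report"]
    ledger = build_ledger(sent)
    tampered = [b"Invoice 1", b"Pay vendor B", b"Quarterly report"]
    print(verify_ledger(sent, ledger), verify_ledger(tampered, ledger))
```

Because every hash feeds into the next, changing one message changes every later entry, which is what makes tampering easy to spot.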

That’s precisely the problem that Sandy Carter of Unstoppable Domains seeks to solve. Sandy is one of the world's leading voices on using blockchain technology to secure your digital likeness in the face of growing deepfake threats. Unstoppable Domains essentially tokenizes your digital likeness, so authenticity is recorded on the blockchain and easily verifiable.

Artificial Intelligence itself, the very technology that powers deepfakes, can also be part of the solution.

AI-driven systems can be trained to detect the subtle signs of deepfake content, such as irregular blinking patterns or unnatural speech rhythms. These systems can continuously learn and adapt, staying one step ahead of the evolving deepfake technology.
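Real detectors are trained models, but the blinking signal is simple enough to illustrate with a rule of thumb. The sketch below is a hand-written heuristic, not a trained AI system. It assumes some upstream face-tracking step (hypothetical here) has already produced the timestamps of each blink, and the `low` and `high` limits are illustrative guesses, not validated thresholds.

```python
def blinks_per_minute(blink_times_s: list[float], clip_length_s: float) -> float:
    """Average blink rate over the clip, given blink timestamps in seconds."""
    if clip_length_s <= 0:
        raise ValueError("clip length must be positive")
    return len(blink_times_s) * 60.0 / clip_length_s


def looks_suspicious(blink_times_s: list[float],
                     clip_length_s: float,
                     low: float = 5.0,
                     high: float = 40.0) -> bool:
    """Flag clips whose blink rate falls outside an assumed plausible range.

    `low` and `high` are illustrative assumptions and would need tuning
    against real footage before being relied on.
    """
    rate = blinks_per_minute(blink_times_s, clip_length_s)
    return rate < low or rate > high


if __name__ == "__main__":
    natural = [i * 4.0 for i in range(15)]  # a blink every 4 seconds
    print(looks_suspicious(natural, 60.0))  # within the assumed range
    print(looks_suspicious([], 60.0))       # no blinks in a full minute
```

A flag from a check like this is a reason to look closer, not proof of a fake.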

Implementing these advanced security measures requires a shift in mindset.

It's no longer enough to defend against the threats we know; we must anticipate and neutralize the threats of tomorrow. This proactive approach to cybersecurity can seem daunting, but it's essential in a world where the lines between reality and digital deception are increasingly blurred.

The importance of staying informed and agile cannot be overstated.

The deepfake threat may be formidable, but businesses can protect themselves against this invisible enemy with the right tools and strategies.

Artificial Intelligence can be a double-edged sword

Navigating the AI landscape requires more than enthusiasm; it demands vigilance because Artificial Intelligence is a double-edged sword.

On one hand, it offers incredible opportunities for efficiency and innovation. On the other, it presents new challenges, like the creation of deepfakes, that can undermine trust and security.

The key is to wield this sword with skill and foresight, taking advantage of AI's potential while defending against its risks.

As a business leader, your imperative is clear: understand AI not just as a tool, but as a field that's rapidly evolving.

It's not enough to implement AI solutions; you must also stay abreast of how AI can be used against the interests of your company.

This means investing in AI literacy across your organization, ensuring that employees are not only proficient in using AI but also in recognizing its misuse.

AI can streamline operations, personalize customer experiences, and unlock data-driven insights that were previously inaccessible. However, as you integrate AI more deeply into your business processes, you’ll need to amp up your security protocols.

This involves regular audits of AI systems, ethical AI training, and the establishment of clear guidelines for AI use.

The balance between implementing AI for growth and protecting against its threats is delicate. It requires a strategic approach, where every new AI implementation is accompanied by a thorough risk assessment.

This is the best way to enjoy the fruits of AI innovation while maintaining a solid defense against the potential perils it brings.

In navigating this balance, the role of leadership is crucial. As a leader, you must foster a culture of responsible AI use, where the ethical implications of AI are considered just as important as its business benefits.

This culture is the bedrock upon which a secure and prosperous future in the age of AI is built.

Going analog with a human firewall

How well is your team equipped to stand as the first line of defense against deepfakes?

While technology plays a crucial role in combating deepfakes, the human element should not be overlooked.

After all, it's often humans who are the targets of deepfake attacks. By empowering employees with knowledge and tools, businesses can create a 'human firewall' against deepfakes.

Education is the first step.

Regular training sessions can help your employees understand what deepfakes are, how they work, and the threats they pose.

This knowledge can make your employees more vigilant and less likely to fall for deepfake scams.

For instance, they might think twice before acting on an unusual video request from a senior executive, or they might be more skeptical of an email that doesn't quite sound like the person it's supposed to be from.

But education alone is not enough.

You also need to foster a culture of security, where employees feel responsible for protecting the organization against threats.

This means encouraging employees to report suspicious activity, even if it turns out to be a false alarm.

It also means creating an environment where employees feel comfortable asking questions and challenging requests that seem out of the ordinary.

Finally, you can equip your employees with tools to detect and respond to deepfakes.

This could include software that can identify deepfake content or protocols for verifying the authenticity of requests. For example, the 'conversation handshake' we implemented in our own company is a simple yet effective tool that any business can adopt.

In the fight against deepfakes, every employee has a role to play. By empowering employees, you can strengthen your defenses and turn your workforce into a powerful weapon against this emerging threat.

After all, in the battle against deepfakes, knowledge is not just power—it's protection.

The age of deepfakes demands vigilance

Are you ready to defend your business in a world where deepfakes blur the line between truth and fiction?

The threat posed by deepfakes is both real and multifaceted, challenging businesses to fortify their defenses with advanced security measures, legal awareness, and a well-informed workforce.

The journey to a secure business environment in the age of AI is not a sprint but a marathon, requiring continuous vigilance, adaptation, and education.

It's about building resilience not just in your technology but in your people and your processes. As you embrace the benefits of AI, you must also brace yourself for its potential misuse, ensuring that your business remains trusted, secure, and ahead of the curve.

Remember, the goal is not to instill fear but to inspire action.

The deepfake threat, while daunting, is not insurmountable. With the right combination of technology, strategy, and human insight, you can navigate these uncharted waters and emerge stronger.

How will you respond to the challenge of deepfakes? Will you wait for the storm to hit, or will you start reinforcing your defenses today?

We invite you to take action.

Schedule a call with us to discuss our corporate training programs, designed to upskill your employees with the knowledge and tools to increase productivity and help your company grow, along with the awareness and skills to combat deepfake threats.

We offer bespoke training for larger groups, ensuring that your entire team is prepared.

Our ongoing Accelerated AI Mastery class provides a comprehensive understanding of AI technologies, and all participants gain executional skills, not just conversational ones.

Don't wait for the unseen enemy to strike. Take the first step towards safeguarding your business today.